Pentaho: Versatile Data Engineering for All Needs—ETL, Reverse ETL, ELT, Data Lakes, Big Data, Data Quality, Reporting, Visualization & Data 360

When it comes to generating business reports, organizations often face a plethora of choices, including JasperReports, Crystal Reports, Microsoft Reporting Services, ActiveReports for .NET, and HTML-to-PDF converters.

Senthilnathan Karuppaiah

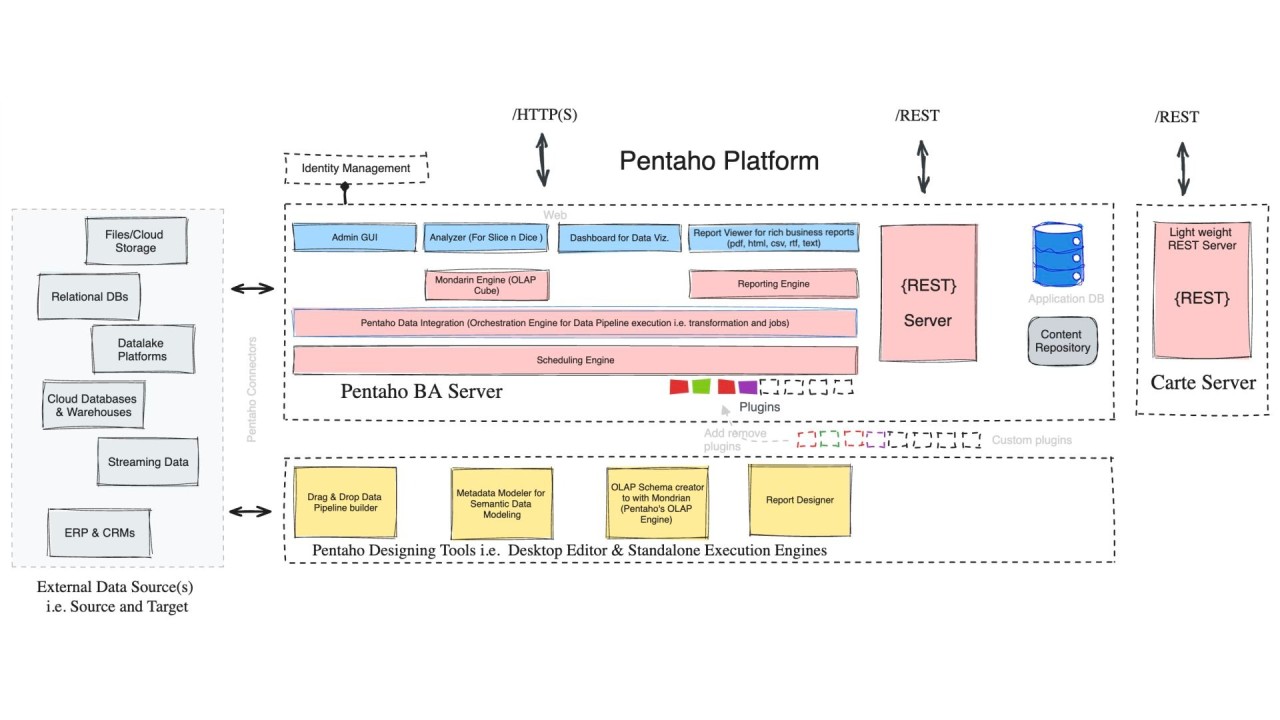

Pentaho remains a powerful and relevant tool in the data integration and analytics space. Its comprehensive features, cross-platform support, and strong community make it an excellent choice for organizations and developers alike. Whether you want to build complex data pipelines, design insightful reports, or dive into data analysis, Pentaho has the tools to help you succeed.

In today's fast-changing data world, having a reliable and flexible tool for data integration and analytics is essential. Pentaho stands out as a powerful solution that remains important, even with many new data products entering the field—not only in ETL but also under the umbrella of data fabric and other modern data management concepts.

Interesting Facts About Pentaho

::list{type="success"}

- Origin of "Data Lake": Did you know the term "data lake" was coined by James Dixon, a co-founder and former CTO of Pentaho? In 2010, he introduced the idea to describe a central place where structured and unstructured data can be stored at any scale.

- Early Support for Big Data: Pentaho was one of the early adopters of big data technologies in its offerings. Pentaho announced support for Hadoop as early as 2010, recognizing the need for flexible data management solutions that could handle large volumes of semi-structured and unstructured data. This support made Pentaho a pioneer in making big data accessible to companies struggling with large-scale data challenges.

- Widely Mentioned in Literature: Pentaho is featured in many data engineering and ETL books, which reflects its wide use in the industry. ::

Why Pentaho Remains Relevant

::list{type="success"}

- Comprehensive Tool Suite: Offers data integration (PDI), reporting, analytics, dashboards, and data mining—all in one platform.

- Cross-Platform Compatibility: Java-based architecture allows it to run on Windows, Linux, and macOS, giving flexibility across different operating systems.

- User-Friendly Interface: Easy drag-and-drop design tools make complex data integration and reporting tasks more accessible to users with different technical skills.

- Extensibility and Customization: Open-source roots allow for customization and integration with other systems, supported by a strong community contributing plugins and improvements.

- Big Data and Cloud Integration: Pentaho was an early supporter of big data technologies like Hadoop and Spark, integrating them into its platform to meet the demands of modern data architectures.

- Lightweight Data Pipeline (Carte): Includes Carte, a lightweight HTTP server that runs and monitors transformations and jobs remotely, enabling distributed processing and scalability.

- Modern Code-Based Pipelines: Offers code-based data pipeline capabilities, similar to tools like dbt, allowing for CI/CD integration, advanced scripting, and automation in data transformation processes.

- Multi-Modal Deployment: Supports various deployment options, including on-premises, Docker, Kubernetes (K8s), and hyperscaler clouds, making it adaptable to different IT environments and big data platforms. ::
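To make the CI/CD point a little more concrete, here is a minimal sketch of how a build step might invoke a transformation through Pan, the command-line runner that ships with Pentaho Data Integration. It assumes `pan.sh` is on the `PATH`; the file path `etl/load_sales.ktr` and the parameter `RUN_DATE` are hypothetical names used only for illustration, and the helper functions are not part of Pentaho itself.

```python
import shlex
import subprocess


def build_pan_command(transformation, params=None, log_level="Basic",
                      pan_executable="pan.sh"):
    """Return the argument list for running one transformation with Pan.

    Assumption: pan_executable resolves to the Pan launcher on this machine.
    """
    command = [
        pan_executable,
        f"-file={transformation}",   # path to the .ktr transformation file
        f"-level={log_level}",       # logging level, e.g. Basic or Detailed
    ]
    # Named parameters are passed as -param:NAME=value; sorted for stable output.
    for name, value in sorted((params or {}).items()):
        command.append(f"-param:{name}={value}")
    return command


def run_transformation(transformation, params=None):
    """Run the transformation; a non-zero exit code raises CalledProcessError."""
    subprocess.run(build_pan_command(transformation, params), check=True)


if __name__ == "__main__":
    # Hypothetical transformation and parameter, printed rather than executed.
    cmd = build_pan_command("etl/load_sales.ktr", {"RUN_DATE": "2024-01-31"})
    print(shlex.join(cmd))
```

Because `run_transformation` raises on a non-zero exit code, a CI job that calls it would fail whenever the transformation fails. Jobs (`.kjb` files) are run with Kitchen rather than Pan, so a similar wrapper would be needed for those.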

Getting Started with Pentaho Developer Edition

If you are interested in exploring Pentaho, the Developer Edition (formerly called the Community Edition) is a great place to start. It is free to use and provides access to most of the platform's features. You can build data pipelines, design reports, and create dashboards without any initial investment.

I will be writing a series of posts with real-life examples of data pipelines, data engineering, and reporting using Pentaho. Stay tuned to learn how to leverage this powerful platform in practical scenarios.

References & Additional Links

::list{type="success"}